AI in automated defence

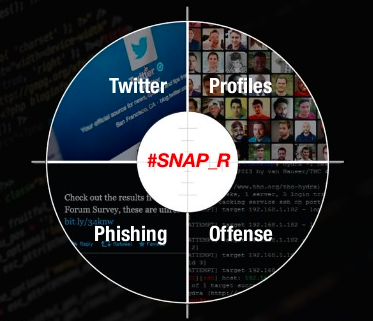

Plenty of resources show that AI techniques are already being used to combat cybercrime. This section briefly presents related work and some existing applications of AI techniques to cyber security.

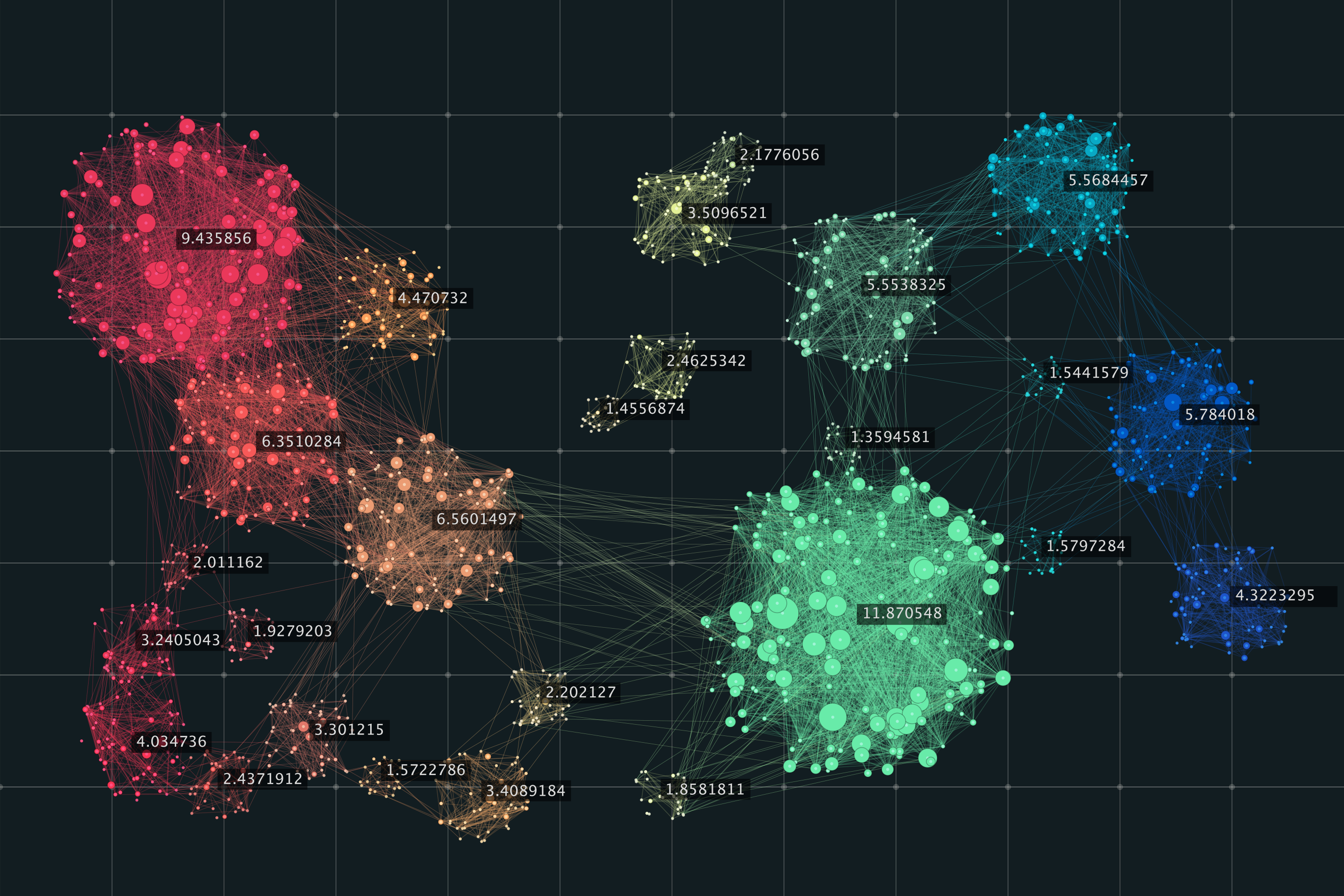

Vectra’s AI-based threat detection and response platform, Cognito, collects and stores network metadata and enriches it with unique security insights. This metadata, together with machine learning techniques, is used to detect and prioritize attacks in real time. Cognito has helped detect and block multiple man-in-the-middle attacks and halt cryptomining schemes in Asia. Cognito also found command-and-control malware that had been hiding for several years.
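Vectra does not publish Cognito's models, so the following is only an illustration of the general idea of enriching network metadata with a security insight and ranking the result. It assumes hypothetical flow records of the form (source, destination, timestamp) and scores each host pair by how regular its connection timing is, a common heuristic for spotting command-and-control "beaconing". The function names `beacon_scores` and `prioritize`, the scoring formula and the sample addresses are illustrative assumptions, not Vectra's method.

```python
from statistics import mean, pstdev

# Hypothetical flow record: (source host, destination, timestamp in seconds).
Flow = tuple[str, str, float]


def beacon_scores(flows: list[Flow], min_connections: int = 5) -> dict[tuple[str, str], float]:
    """Score each (source, destination) pair by how regular its connection timing is.

    Beaconing malware tends to call home at a fixed interval, so the gaps between
    its connections barely vary. The score is 1 / (1 + coefficient of variation
    of those gaps): 1.0 for perfectly periodic traffic, lower for irregular traffic.
    """
    times: dict[tuple[str, str], list[float]] = {}
    for src, dst, ts in flows:
        times.setdefault((src, dst), []).append(ts)

    scores: dict[tuple[str, str], float] = {}
    for pair, stamps in times.items():
        if len(stamps) < min_connections:
            continue  # too few connections to judge periodicity
        stamps.sort()
        gaps = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
        avg = mean(gaps)
        if avg == 0:
            continue  # all connections at the same instant; timing says nothing
        scores[pair] = 1.0 / (1.0 + pstdev(gaps) / avg)
    return scores


def prioritize(scores: dict[tuple[str, str], float], top: int = 3) -> list[tuple[tuple[str, str], float]]:
    """Return the `top` most beacon-like pairs, highest score first."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top]


# Illustrative data: one host contacts an external address exactly every 60 s,
# another connects at irregular, human-like times.
flows = [("10.0.0.5", "203.0.113.7", 60.0 * i) for i in range(10)]
flows += [("10.0.0.8", "198.51.100.2", float(t)) for t in (3, 40, 41, 200, 420, 430, 900)]
print(prioritize(beacon_scores(flows))[0])  # (('10.0.0.5', '203.0.113.7'), 1.0)
```

A perfectly periodic pair scores 1.0. In practice attackers add random jitter to their check-in intervals, so a real detector would combine timing with other enriched features rather than rely on this score alone.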

With the rapid growth in the use of personal mobile devices, automated security techniques are needed now more than ever to keep pace. Zimperium and MobileIron help organizations adopt mobile anti-malware solutions that incorporate artificial intelligence. Integrating Zimperium’s AI-based threat detection with MobileIron’s compliance and security engine can address network, device, and application threats, so organizations can use this AI-based threat detection software to protect personal mobile devices.

AI-based crime analysis tools, such as those from California-based Armorway, use AI and game theory to predict terrorist threats. The US Coast Guard is one user of this technology, deploying Armorway for port security in Los Angeles, Boston and New York.
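Armorway's models are not described here, but game-theoretic patrol planning is often framed as a security game: the defender randomizes limited patrols over targets, and an attacker who observes the coverage picks the target with the best expected payoff. The Python sketch below solves a simplified zero-sum version by bisection, choosing per-target coverage probabilities that minimize the attacker's best expected payoff. The target names, payoff values and the function `minimax_coverage` are hypothetical, and real systems typically use richer general-sum models.

```python
# Simplified zero-sum security game solved by bisection on the attacker's payoff.
Payoffs = dict[str, tuple[float, float]]


def minimax_coverage(payoffs: Payoffs, resources: float, iters: int = 60) -> tuple[float, dict[str, float]]:
    """Choose coverage probabilities (0..1 per target, summing to at most `resources`)
    that minimize the attacker's best expected payoff.

    payoffs[target] = (attacker payoff if unprotected, payoff if protected), with the
    unprotected payoff assumed strictly larger. With coverage c the attacker expects
    c * protected + (1 - c) * unprotected, so holding a target to at most t needs
    c = (unprotected - t) / (unprotected - protected), clipped to [0, 1].
    """

    def needed(t: float) -> dict[str, float]:
        return {
            name: 0.0 if unprot <= t else min(1.0, (unprot - t) / (unprot - prot))
            for name, (unprot, prot) in payoffs.items()
        }

    lo = max(prot for _, prot in payoffs.values())      # no coverage can push the attacker below this
    hi = max(unprot for unprot, _ in payoffs.values())  # attacker's payoff with zero coverage
    if sum(needed(lo).values()) <= resources:
        return lo, needed(lo)
    for _ in range(iters):
        mid = (lo + hi) / 2
        if sum(needed(mid).values()) <= resources:
            hi = mid  # affordable: try a lower ceiling
        else:
            lo = mid  # too expensive: relax the ceiling
    return hi, needed(hi)


# Hypothetical port targets with attacker payoffs (unprotected, protected).
targets = {"container_terminal": (10.0, 0.0), "ferry_dock": (5.0, 0.0), "fuel_pier": (2.0, 0.0)}
ceiling, coverage = minimax_coverage(targets, resources=1.0)
print(round(ceiling, 3), {k: round(v, 3) for k, v in coverage.items()})
# 3.333 {'container_terminal': 0.667, 'ferry_dock': 0.333, 'fuel_pier': 0.0}
```

With one patrol unit, the two most valuable targets share the coverage so that attacking either yields the same expected payoff (10/3), while the low-value target is left uncovered because its unprotected payoff is already below that ceiling. General-sum payoffs and real patrol constraints usually call for linear or mixed-integer programming instead of this bisection.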

Darktrace’s Enterprise Immune System is based on machine learning technology. The platform models the behavior of every device, user, and network to learn their specific patterns of activity. Darktrace then automatically identifies anomalous behavior and alerts the companies using its software in real time. Energy Saving Trust was one such company: the software detected numerous anomalous activities as soon as they occurred and alerted its security team to investigate further, catching threats early and mitigating the risk before real damage was done.
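Darktrace's actual algorithms are proprietary; the following sketch only illustrates the general idea of learning a separate baseline for each device and flagging deviations from it. It keeps a running mean and standard deviation per device with Welford's algorithm and raises an alert when an observation's z-score exceeds a threshold. The metric (megabytes sent per hour), the device names, the `DeviceMonitor` class and the threshold values are assumptions for illustration.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Baseline:
    """Running mean and variance of one device's metric (Welford's algorithm)."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the running mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


class DeviceMonitor:
    """Learns a separate 'normal' for every device and flags large deviations."""

    def __init__(self, threshold: float = 4.0, warmup: int = 10):
        self.threshold = threshold  # z-score above which an observation is anomalous
        self.warmup = warmup        # observations required before alerting
        self.baselines: dict[str, Baseline] = defaultdict(Baseline)

    def observe(self, device: str, value: float) -> bool:
        """Return True if `value` is anomalous for `device`; learn from normal values."""
        b = self.baselines[device]
        anomalous = False
        if b.n >= self.warmup:
            sd = b.std()
            anomalous = abs(value - b.mean) / sd > self.threshold if sd > 0 else value != b.mean
        if not anomalous:
            b.update(value)  # only normal observations refine the baseline
        return anomalous


monitor = DeviceMonitor()
for hour in range(24):
    monitor.observe("printer-01", 50.0 + hour % 3)     # steady ~50 MB/hour
    monitor.observe("build-server", 900.0 + hour % 3)  # steady ~900 MB/hour
print(monitor.observe("printer-01", 900.0))    # True: huge upload for a printer
print(monitor.observe("build-server", 901.0))  # False: normal for this server
```

Anomalous observations are deliberately kept out of the baseline so an ongoing attack cannot teach the model that it is normal; the trade-off is that a legitimate, lasting change in a device's behavior will keep raising alerts until an analyst resets its baseline.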

Paladon’s threat hunting service leverages data science and machine learning to build cyber security software that tackles issues such as data exfiltration, advanced targeted attacks, ransomware, malware, zero-day attacks, social engineering, and encrypted attacks. A global bank uses Paladon’s AI-based Managed Detection and Response (MDR) service to advance its threat detection and response capabilities.

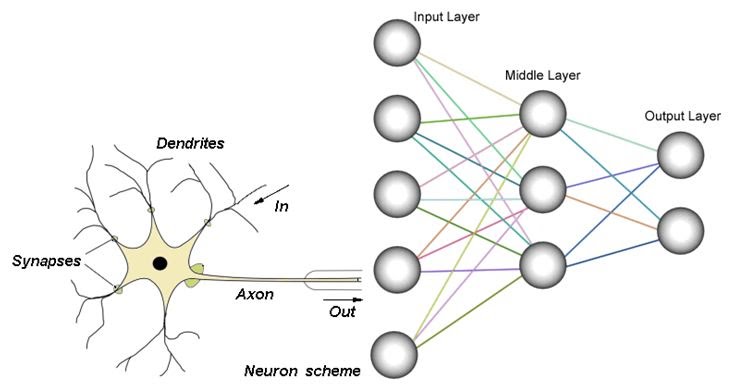

An interesting application of neural networks is security screening. To assist in screening, the United States Department of Homeland Security has developed a system called AVATAR that analyzes body gestures and facial expressions. AVATAR uses neural networks to pick up minute variations in facial expressions and body gestures that may raise suspicion. The system presents a virtual face that asks questions, and it monitors how the answers are given as well as variations in voice tone. Because the neural network is trained on a dataset of answers and tones from people known to be lying and people known to be telling the truth, an individual's answers can be compared against the patterns that indicate someone might be lying. Such a system could be used in a myriad of security settings to flag people suspected of lying for further evaluation by human interrogators.
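The details of AVATAR's neural networks are not given here, so the sketch below shows only the underlying supervised-learning idea at the smallest possible scale: a single sigmoid neuron (equivalent to logistic regression) trained by gradient descent on answers labeled truthful (0) or deceptive (1). The two features, response delay and voice-pitch variation, and the toy sample values are hypothetical and assumed to be pre-normalized to [0, 1]; a real system would use far richer inputs and deeper networks.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_neuron(samples, labels, lr=1.0, epochs=2000):
    """Fit the weights and bias of one sigmoid neuron by batch gradient descent
    on the cross-entropy loss (gradient = (prediction - label) * input)."""
    n_features = len(samples[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(samples, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / m for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def deception_score(w, b, x):
    """Score in (0, 1): how closely answer features x resemble the 'deceptive' class."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Hypothetical, pre-normalized features per answer: (response delay, voice-pitch variation).
truthful = [(0.2, 0.1), (0.3, 0.2), (0.1, 0.3), (0.25, 0.15)]
deceptive = [(0.8, 0.7), (0.9, 0.6), (0.7, 0.9), (0.85, 0.8)]
X = truthful + deceptive
y = [0] * len(truthful) + [1] * len(deceptive)
w, b = train_neuron(X, y)
print(deception_score(w, b, (0.75, 0.8)) > 0.5)  # True: resembles the deceptive examples
```

A score above 0.5 only means the answer resembles the deceptive examples in the training set, which is why such a flag is best treated as a prompt for human follow-up rather than a verdict.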