Iris

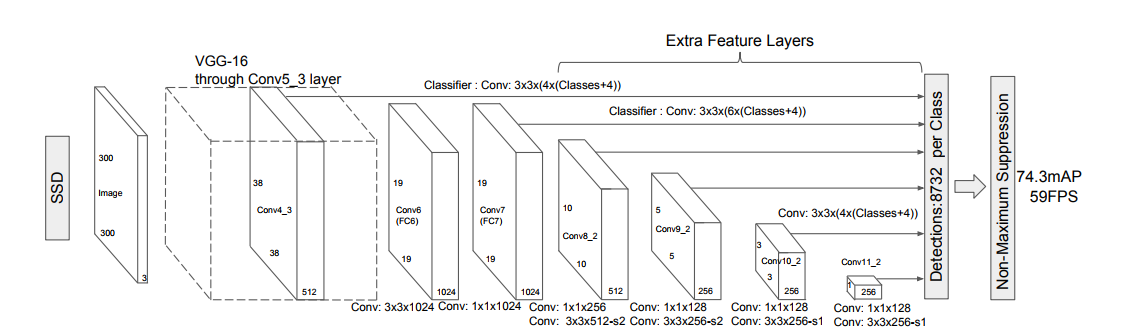

Iris is an Android application for object detection and image recognition. It was built as a month-long, self-assigned class project for the Intro to Mobile Application Development course. The goal was to apply all the mobile application development concepts taught in the course and, as a personal goal, to add a machine learning component. The application's basic UI elements and its use of the camera covered the mobile development portion of the project. Its two machine learning models, one for object detection and one for image recognition, covered the machine learning portion. Both models were pre-trained TensorFlow Lite convolutional neural networks optimized to run on a mobile device.

- Time Period: May 2018

- Project Type: Class Project

- GitHub: Project Link